How to write better transparency reports

I'm Alice Hunsberger. Trust & Safety Insider is my weekly rundown on the topics, industry trends and workplace strategies that trust and safety professionals need to know to do their jobs.

This week, I'm thinking about the history of transparency reports, how I'd approach them if I were at a platform with unlimited resources and freedom, and who the intended audience should really be.

Read to the end of today's T&S Insider for links to resources on safe gaming, open source tooling, and surviving a layoff, as well as my most recent podcast episode.

Get in touch if you'd like your questions answered or just want to share your feedback. Here we go! — Alice

Today’s edition is in partnership with Safer by Thorn, a purpose-built solution for the detection of online sexual harms against children.

New look! Safer launched in 2019 with our hash matching solution. Since then, we’ve added scene-sensitive video hashing, a CSAM classifier, a text classifier, the option for cross-platform hash sharing, new reporting options, and more. With our product offering expanding, it was time to give Safer a new look – one worthy of our innovation and expertise.

We are committed to equipping trust and safety teams with purpose-built solutions and resources to protect children from sexual harms in the digital age.

Old vs new transparency reports

The big transparency report deadline in April got me thinking about the information platforms and other online intermediaries have made available to the public. What started as a trickle 15 years ago has become a flood as regulation has made it part of the job of T&S and compliance teams.

However, I’m not sure many platforms have considered why they produce transparency reports and what story they want to tell in the voluntary ones that some still write. So, I thought I’d go back to the beginning.

A quick history of transparency reports

Historically, transparency reports were voluntary disclosures designed to ease users' concerns about data privacy and government requests for data. In 2010, Google became the first internet company to publish one about government data requests, an effort to shed light on the scale and scope of government requests for censorship and data around the globe. Other companies followed suit, and the scope slowly broadened, with Etsy becoming the first company to share information on its policy enforcement practices in 2015.

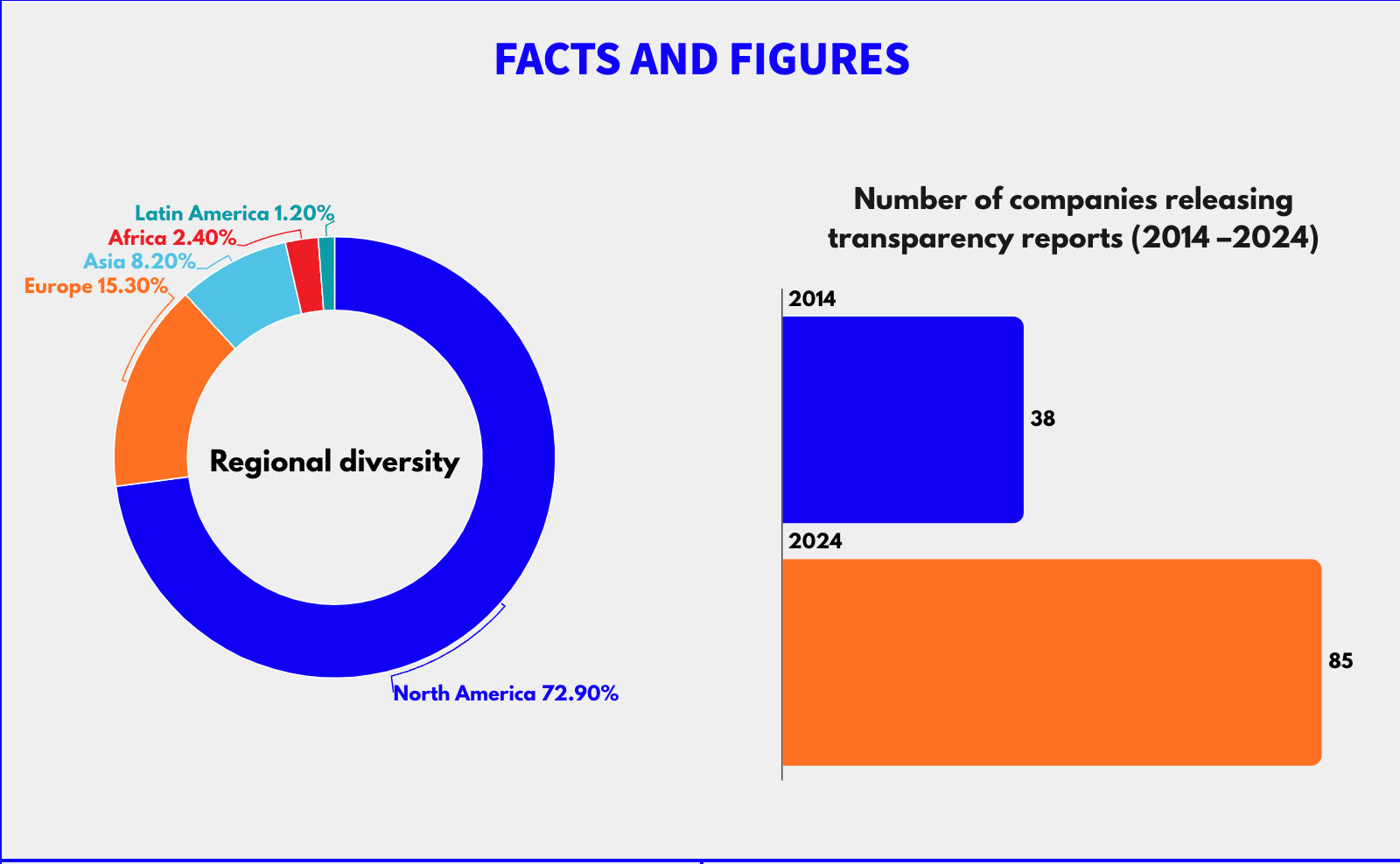

The Transparency Reporting Index (TRI) from Access Now notes that 85 companies released voluntary transparency reports in 2024. Companies use these reports to discuss threats to their work more broadly, signal company values and investments, and celebrate the work of trust and safety teams. This is a good thing: it means that companies have invested in T&S, take it seriously, and (at the very least) give teams the space and resources to publicise their work.

However, these reports are essentially PR releases, with the information carefully curated and the narrative tightly controlled. Further, the data isn’t standardised across platforms, nor is it audited, so the reports aren’t particularly helpful for researchers or regulators who are trying to find out what isn't being done well. They’re also often dense PDFs buried in company press release pages, never meaningfully digested by the platform’s users, the media or other key audiences. As Ofcom’s 2021 research report on transparency noted: “There is a significant difference between genuine or ‘meaningful’ transparency initiatives that add value vs transparency for show or ‘theatre’”.

Why some platforms don’t publish transparency reports

Many online platforms don’t release transparency reports at all. The dating app industry, for example, has declined to publish them across the board. Dating apps are criticised for collecting too much data while simultaneously being told they’re not collecting enough data to keep users safe. It’s a difficult balance, and it has made the industry shy away from revealing its inner workings.

As head of T&S at Grindr, I dipped my toe into transparency through quarterly “Voice of the Customer” blog posts, which highlighted what we were hearing from customers and what we’d been working on and improving. However, this was a far cry from a full transparency report.

For small companies, a transparency report is a significant undertaking that requires resources and a clean set of data to work from. For example, if Grindr had wanted to talk about its work reducing user reports, it would have needed to collate and make sense of reports coming from a variety of sources (in-app, email, helpdesk forms). Pulling all that together, reporting on it meaningfully, and giving the information context is easily a full-time job for several people: a real commitment for a lean startup.

Of course, regulations like the EU's Digital Services Act and the UK's Online Safety Act have now mandated transparency. Platforms have been forced to invest in headcount and data flows that allow them to answer regulatory questions easily. But that leaves a question about voluntary reports: who are they for, and what should they be in a world of frequent regulatory disclosures?

Who should benefit from transparency reports

Although Ofcom’s report refers to regulatory transparency reporting, I like the criteria it lays out for meaningful transparency disclosures: “Firstly, does the information disclosed mean anything to the intended audience? Secondly, will this type of information lead to effective outcomes for transparency and accountability?”

Some platforms seem to forget that the primary audience for transparency reports should be the users of a platform. By communicating directly with users about safety initiatives, platforms can build trust and set realistic expectations so that users can make informed decisions.

Platforms face pressure from regulators, journalists, and advocacy organisations because users turn to these outlets to complain when they don’t get meaningful information from the platforms themselves. Transparency reports can absolutely be organised as PR packages for journalists or as compliance reports for regulators, but they need to be written for real users as well.

So, in no particular order, here are three things I'd consider if I were creating a voluntary transparency report: