Trust & Safety is how platforms put values into action

I'm Alice Hunsberger. Trust & Safety Insider is my weekly rundown on the topics, industry trends and workplace strategies that trust and safety professionals need to know about to do their job. This week, I write about:

- Why platforms need to be more explicit about their values when explaining Trust & Safety policy and enforcement

- The resources I wish I'd had when I started in Trust & Safety

Get in touch if you'd like your questions answered or just want to share your feedback. Here we go! — Alice

Why platforms need to be more explicit about their values

The practice of Trust & Safety is, in its broadest definition, how companies put their values into action. It’s not just what they say, but what they actually do.

A lot of emphasis is put on the act of content moderation (the recent Supreme Court cases over Florida's and Texas's social media laws are an example of this), but content moderation is just one part of a larger T&S strategy designed to reinforce specific company values. Each moderation decision is an editorial decision (see the argument made by the platforms in the NetChoice cases) that shapes what the platform feels like and the types of people who want to spend time there.

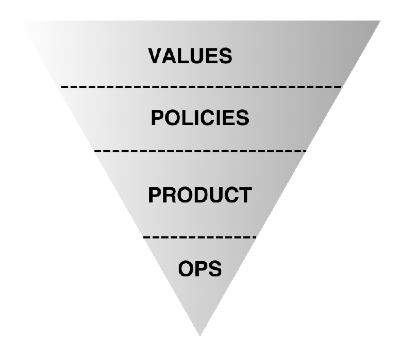

Most of you will be familiar with how the process works but, for those who aren't, it goes something like this:

- Platforms start by defining their particular values, then write policies that are aligned with those values

- They create product features and interventions to reinforce those policies

- Finally, they resort to operations, often human moderators, to enforce the policies

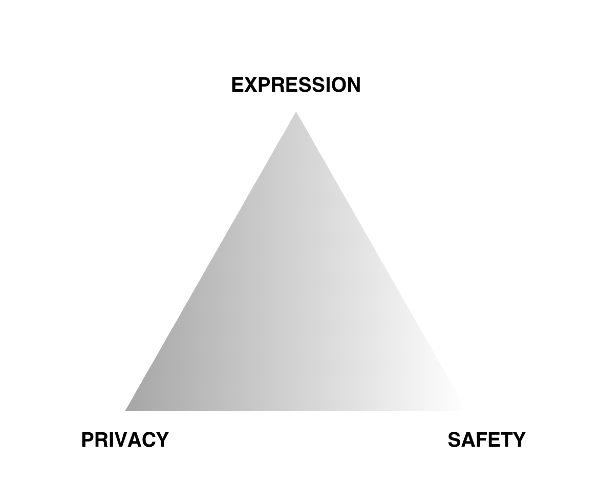

It is through this process that companies must decide how to balance the sometimes competing values of privacy, safety, and self-expression. For example, Signal values privacy and uses end-to-end encryption for all messages, whereas Tinder puts more value on safety and proactively scans messages for harmful content. X/Twitter values self-expression and has a "Freedom of Speech, Not Freedom of Reach" policy, whereas Pinterest limits certain kinds of content in order to create a safer, more positive space.

We can even see values manifest in specific policy areas. Here's a recent example: X/Twitter removed its policy against deadnaming last year under Elon Musk's leadership, only to reinstate it a little over a week ago, coinciding with a campaign from GLAAD. The back-and-forth around this policy shows the tension between freedom of expression and creating a space where LGBTQ+ users aren't targeted and harassed.

In the past, platforms have described themselves as a digital town square: a space where everyone can talk about anything. In reality, every platform goes through what Techdirt's Mike Masnick calls the Content Moderation Learning Curve before realising that tradeoffs must be made. And because users have different value systems, not everyone will agree with these decisions. There is no reality in which moderation decisions keep everyone happy all of the time.

Telling your moderation story

All social media platforms exercise editorial discretion, and express their value systems, through their Trust & Safety strategies and enforcement. However, they don't always describe it that way.

When I worked at Grindr, I tried to be as explicit about this as possible. I rewrote the Community Guidelines (released just this month) to highlight which values were important in the policy documentation. Instead of writing a long list of things that are not allowed (censorship!), I focused on what Grindr's values are and what behaviour is encouraged.

This values-based communication was an attempt to create a specific kind of space for people who share the same value system. The subtext is: if you don't agree with these values, this might not be the place for you.

What does this mean in practice? As users recognise that it is impossible for any platform to be truly neutral, companies have the opportunity to more explicitly explain what their values are, how they reinforce those values, and why. Think about how Bluesky has talked publicly about "composable moderation" and the protocols that underpin it.

Adopting a values-forward stance in this way makes a strong case for treating Trust & Safety work as editorial activity protected under the First Amendment, and it lets users judge whether the space aligns with their own personal values and is right for them.

Sometimes it might not be, and that's OK too. But users can only make that call if platforms proactively communicate their values.

You ask, I answer

Send me your questions (or things you need help thinking through) and I'll answer them in an upcoming edition of T&S Insider, only with Everything in Moderation*

Career resources

As I sit down to write this, it's International Women's Day. Navigating my career as a woman in tech hasn't been easy. When I started working at a tech startup in 2010, I was almost always the only woman in the room. It wasn't until 2017 that another woman joined me in leadership meetings, and it wasn't until 2023 (yes, last year!) that I had a female manager for the first time.

I remember recommending my workplace to other women by saying, with astonishment, "the men usually listen to you and actually respect your opinions!" Sadly, that's not a given at tech companies, and it's still something I check for when vetting organisations today.

It didn't help that the practice of Trust & Safety was extremely new at the time. So, while I have been fortunate enough to lead T&S teams at some of the internet's largest companies, I have never had a mentor in the field and have never reported directly to another T&S professional. Essentially, I have had to figure it out as I go.

Luckily, things have evolved, even though they're still not perfect. Our industry has communities like the Integrity Institute and the Trust & Safety Professional Association, and T&S professionals are some of the most diverse, inclusive, and thoughtful folks around.

A new resource, Leadership Advice for New Trust & Safety Leaders by Bri Riggio, collects the advice I wish I'd had back then. Riggio interviewed 10 T&S leaders and organised their wisdom into themes, with actionable tips. It covers avoiding burnout, managing teams, aligning to company values and, in particular, seeking out help:

"While easier said than done, building a support network for yourself will be critical for longer-term resilience and sustainability in this work. Having others to lean on can also help you become a better advocate and leader for others."

Being a mentor has been one of the most rewarding things I've done in my career, and it's been an honour to provide support and advice to others. If you're looking for mentorship (or to be a mentor), here are some T&S mentorship programs:

- Trust & Safety Coffee Chats from the Trust & Safety Professional Association - many of the mentors are open to talking with folks who aren't TSPA members. You can book a mentorship chat directly from each mentor's page.

- Responsible Tech Mentorship Program from All Tech is Human - a structured programme in which groups of mentees are matched with a mentor and meet regularly.

- T&S Mentorship Match from Jeff Dunn - an informal spreadsheet of mentors who are willing to be contacted. If you want to sign up to be a mentor, you can do so here.

Also worth reading

Instagram's uneasy rise as a news site (New York Times)

Why? This highlights some of the struggles in defining what a platform is for, what its values are, and how to enforce them. Instagram leadership has said they want to de-emphasise news content, but their users often go to the platform for news.

The Known Unknowns of Online Election Interference (The Klonickles)

Why? In a year of global elections, technology is accelerating the potential for election interference. Two emerging areas to watch: the deceptive power of AI-generated audio, and the effects of social media platform decentralisation.

Meta's Data Dance with its Oversight Board (Platformer)

Why? Meta's Oversight Board is a promising model for platform governance, but to know whether its recommendations are being put into action, it needs access to data, and Meta isn't always forthcoming.
