It's time to end the 'default male'

I'm Alice Hunsberger. Trust & Safety Insider is my weekly rundown on the topics, industry trends and workplace strategies that trust and safety professionals need to know about to do their job.

This week's edition is a day late because it was a holiday yesterday — thanks for understanding. Today, I'm thinking about:

- How and why the video demos of OpenAI's GPT-4o left me feeling unsettled

- A way you can help improve understanding of T&S online

🥳 Next week's newsletter is the 250th edition of EiM and, to mark the occasion, Ben and I are hosting a subscriber hangout on Friday at 10am EST / 3pm BST. The plan is simply to have a chat and see what folks are working on. If you're free, share your name and email address and we'll wing across an invite. Some very cool friends are already attending, so we hope you can join us!

Get in touch if you'd like your questions answered or just want to share your feedback. Here we go! — Alice

I'm not a man who needs his ego stroked, so don't treat me like one

Everyone is talking about the OpenAI demo videos of GPT-4o, and I was certainly impressed when I saw them, but I was left with one clear feeling: this isn't meant for me.

That's because the video and voice interactions between the AI and humans appear to be designed for men. One voice model sounds suspiciously like Scarlett Johansson, and the conversational tone was clearly inspired by the movie Her, a connection Sam Altman confirmed by tweeting the word "her" as GPT-4o was released. TechCrunch has more:

OpenAI’s demo last week aimed to showcase the chatbot’s enhanced conversational abilities but went viral after the sultry voice giggled at almost everything an OpenAI employee was saying. At one point, the chatbot told the employee: “Wow, that’s quite the outfit you’ve got on.” At another point, the chatbot said, “Stop it, you’re making me blush” after receiving a compliment.

I watched one video that showed a man prepping for a job interview. He asks the AI for feedback on how he looks, and the AI responds by laughing, teasing him a bit, and gently suggesting that he run a hand through his hair. This is not the way women talk to each other, nor is it the way men talk to each other. This is the way a woman talks to a man when she's trying to tell him something he doesn't want to hear without making him angry.

Unfortunately, this is not super surprising. The concept of the "default male" (when products or experiences designed around men are treated as one-size-fits-all) has existed for decades. It comes about because researchers often exclude women and non-binary people from studies and surveys, and therefore don't consider their needs and wants. Famous physical examples include seatbelts and cellphones (although we got good news on seatbelts this week).

AI as a reflection of society?

At this point in the AI hype cycle, we don't want to feel as though the AI is more powerful than us. The human should be the one in control: independent and ambitious.

Unfortunately, our society is patriarchal, and AI must fit into our very real social norms. So the human in control is presumed to be a man. The AI becomes a way to help them, to cooperate and to be empathetic to the human’s wants or needs.

That's why we end up in a situation where male traits are assigned to the human, or user, and female traits to the AI. It's also why the conversation felt very familiar to me but also made me supremely uncomfortable. No one talks to me this way in real life, nor would I let them.

The double bind

These power dynamics, the ones that AI replicates and which feel eerily like a 1960s secretary flattering her boss, are ones I have never personally experienced. I refuse to talk to men, or anyone for that matter, in that way, and no one has ever talked to me that way either. However, because I am a woman in leadership, I am one of the millions of women around the world caught in a double bind.

If we are assertive, independent, and decisive (stereotypically male traits), we are seen as competent, but we are disliked. If we are empathetic and cooperative (stereotypically female traits), we are liked, but seen as incompetent. I have had to tread a very careful line throughout my career. Although I am naturally very direct and decisive, I soften that quite a bit so that I'm still liked, because being liked is important to me. In turn, I've been called a "cheerleader" and told that I need to work on my executive presence. Like many women, I can't win.

As Vox explains, these problematic gendered perceptions are also why Siri and Alexa have female sounding voices:

Research shows that when people need help, they prefer to hear it delivered in a female voice, which they perceive as non-threatening. (They prefer a male voice when it comes to authoritative statements.) And companies design the assistants to be unfailingly upbeat and polite in part because that sort of behavior maximizes a user’s desire to keep engaging with the device.

I don't need my ego stroked

The thing to understand about AI is that it’s essentially a stereotype of human interactions, based on aggregated data from our real, messy, human selves, with embedded bias and power dynamics included. Of course a conversational AI assistant replicates the dynamic between a man and a woman. Of course AI descriptions of gender or ethnicity feel like stereotypes. It’s because they are stereotypes.

I wanted to play more with the GPT-4o video chat capabilities shown in OpenAI's promo videos, to see if they feel as grating live as they did on screen, but unfortunately they haven't been released yet. In the version I have access to, AI voices can respond to my questions, but they are simply reading out text responses. That text is in the voice and tone we've come to recognize in AI: direct, professional, and neutral.

I feel comfortable with this kind of AI conversation because it doesn’t mimic how real human conversations feel. It's not realistic, but I'd prefer a conversation that feels off because it's inhuman to one that feels off because it's talking to me like I'm a man who needs his ego stroked.

A better default is no default

I've long argued that there is no such thing as "neutral" when it comes to platform policy. Platforms must act on their values, be explicit about what those are, and allow for as much customization as possible. I love the community moderation models of Reddit and Discord, and I'm excited about innovations like Bluesky's Stackable Moderation, which allows people to create or sign up for specialised, niche moderation applications.

I believe the same approach is needed for AI. When designing products for a diverse, global audience, the answer cannot be to reduce all of humanity to one experience. There is no “default person” and so maybe there should be no “default model”.

Imagine a future where there is more transparency around the data that models are built on, who they represent, and what kind of interactions they replicate, instead of pretending we’re all the same and want the same things. What if instead of releasing different voices for one AI model, OpenAI released different models based on more niche datasets? I’d like to try a model that is based on data of women or queer people talking to each other. Think how different that might feel.

Our society associates women with traits like care for others, fostering community, balancing power dynamics, and understanding how people relate to each other. The idea of centering an experience of relationality, safety, and equity is a feminist one, and this is what I believe Trust & Safety to be truly about. It's no surprise, then, that we're seeing such a backlash against T&S in our society, yet it may also be why T&S is less male-dominated than many other fields in tech.

I believe there is enormous opportunity in this: we can use these ways of seeing the world (no matter our gender!) to create ways of interacting with each other and with technology that not only acknowledge our differences but celebrate them. Instead of flattening all of humanity into one default experience, we craft a multitude of versions that speak to a wide array of life experiences.

But to do that, we need to end the era of the 'default male'.

You ask, I answer

Send me your questions — or things you need help to think through — and I'll answer them in an upcoming edition of T&S Insider, only with Everything in Moderation*

A call to action

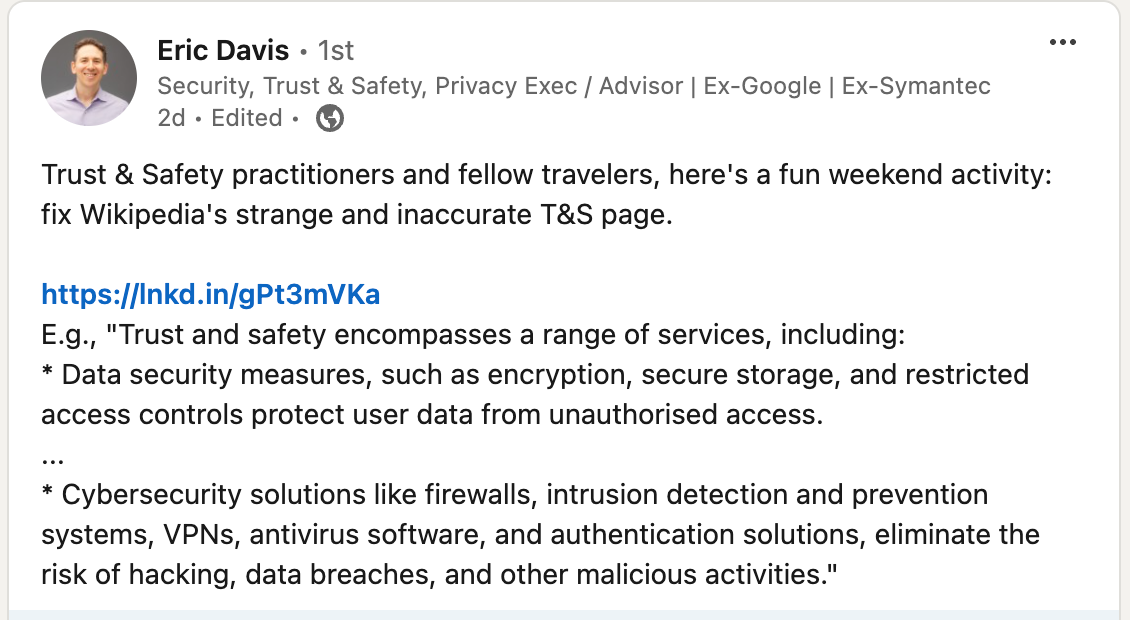

If you have some free time, consider fixing the Trust & Safety Wikipedia page! We know people misunderstand T&S all the time, and Wikipedia is often a first go-to for people trying to learn more. Let's get this updated, shall we?

Also worth reading

Google’s “AI Overview” can give false, misleading, and dangerous answers (Ars Technica)

Why? This is a really comprehensive article showing the ways that AI summaries can fail. (One great example is summaries about people who have the same name. Turns out, there is another Alice Hunsberger who is a professor of Islamic studies, and AI summaries conflate the two of us.)

Has Social Media become less informative in the last year? (Psych of Tech)

Why? Nextdoor & Pinterest lead the way in becoming more informative places for users; YouTube remains the most informative platform.

Five Section 230 Cases That Made Online Communities Better (TechDirt)

Why? Folks spend so much time talking about how Section 230 is bad and why it should be repealed; instead we should talk about how it's made things better.
